Teradata recently gave me a technology update and a peek into the future of its portfolio for big data, information management and business analytics at its annual technology influencer summit. The company continues to innovate and build upon its Teradata 14 releases and its new processing technology. Since my last analysis of Teradata’s big data strategy, it has embraced technologies like Hadoop with its Teradata Aster Appliance, which won our 2012 Technology Innovation Award in Big Data....


Topics: Big Data, MicroStrategy, SAS, Tableau, Teradata, Customer Excellence, Operational Performance, Analytics, Business Analytics, Business Intelligence, CIO, Cloud Computing, Customer & Contact Center, In-Memory Computing, Information Applications, Information Management, Location Intelligence, Operational Intelligence, CMO, Discovery, Intelligent Memory, Teradata Aster, Strata+Hadoop

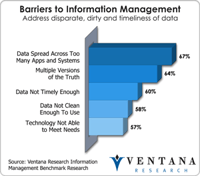

Data is a commodity in business. To become useful information, data must be put into a specific business context. Without information, today’s businesses can’t function. Without the right information, available to the right people at the right time, an organization cannot make the right decisions, take the right actions, or compete effectively and prosper. Information must be crafted and made available to employees, customers, suppliers, partners and consumers in the forms they want it at...


Topics: Big Data, Analytics, Business Analytics, Cloud Computing, In-Memory Computing, Information Applications, Information Management, Information Optimization, Strata+Hadoop, Digital Technology

Two key themes that emerged from Larry Ellison’s Sunday night keynote at this year’s Oracle OpenWorld were faster processing speed and cheaper storage. An underlying purpose of these themes was to assert the importance of Oracle’s strategic vertical integration of hardware and software with the acquisition of Sun. I try to view technology keynotes like this from the perspective of a practical business user. Advancements such as these are important because enhancing the performance and...


Topics: Big Data, Customer Experience, executive, IT Performance, Business Analytics, Business Performance, Data Management, Financial Performance, In-Memory Computing, Information Management, Business Process Management, Data, FPM

My colleague Mark Smith and I recently chatted with executives of Tidemark, a company in the early stages of providing business analytics for decision-makers. It has a roster of experienced executive talent and solid financial backing. There’s a strategic link with Workday that reflects a common background at the operational and investor levels. As it gets rolling, Tidemark is targeting large and very large companies as customers for its cloud-based system for analyzing data. It can automate alerts...


Topics: Big Data, Data Warehousing, Master Data Management, Performance Management, Planning, Predictive Analytics, Sales Performance, GRC, Budgeting, Risk Analytics, Operational Performance, Analytics, Business Analytics, Business Collaboration, Business Intelligence, Business Mobility, Business Performance, Cloud Computing, Customer & Contact Center, Data Governance, Data Integration, Financial Performance, In-Memory Computing, Information Management, Mobility, Workforce Performance, Risk, Workday, Financial Performance Management, Integrated Business Planning, Strata+Hadoop

Tableau Software officially released Version 6 of its product this week. Tableau approaches business intelligence from the end user’s perspective, focusing primarily on delivering tools that allow people to easily interact with data and visualize it. With this release, Tableau has advanced its in-memory processing capabilities significantly. Fundamentally, Tableau 6 shifts from the intelligent caching scheme used in prior versions to a columnar, in-memory data architecture in order to increase...


Topics: Data Visualization, Enterprise Data Strategy, Tableau, Analytics, Business Analytics, Business Intelligence, CIO, In-Memory Computing

Interest in and development of in-memory technologies have increased over the last few years, driven in part by the widespread availability of affordable 64-bit hardware and operating systems and by the performance advantages in-memory operations provide over disk-based operations. Some software vendors, such as SAP, whose High-Performance Analytic Appliance (HANA) project has been advancing with momentum, have even suggested that we can put our entire analytic systems in memory.


Topics: Database, Enterprise Data Strategy, IT Performance, Analytics, Business Analytics, Business Intelligence, CIO, Complex Event Processing, In-Memory Computing, Information Management, Information Technology