Discussion about potential deployment locations for analytics and data workloads is often based on the assumption that, for enterprise workloads, there is a binary choice between on-premises data centers and public cloud. However, the low-latency performance or sovereignty requirements of a significant and growing proportion of workloads make them better suited to processing data where it is generated rather than in a centralized on-premises or public cloud environment. Intelligent applications that rely on real-time inferencing require low-latency data processing and compliance with data sovereignty and privacy regulations. The increasing importance of these applications presents a growing opportunity for data platform vendors to distinguish themselves from cloud providers with products that support data processing at the edge as well as in on-premises data centers and the cloud.

The “edge” is a somewhat amorphous term that is more neatly defined by what it is not (on-premises data center or public cloud infrastructure) than what it is. Despite being commonly used as a singular noun, the term refers to a broad spectrum of potential locations and compute resources, including:

- Edge devices. Also referred to as Internet of Things (IoT) devices, these include medical and manufacturing equipment, buildings, vehicles and utilities infrastructure with connected sensors and controllers.

- Edge nodes or servers. These include networking equipment, gateway servers and micro data centers that provide local data processing and caching close to the source of the data – such as within retail stores, factories, hospitals or other facilities.

- Regional data centers. These support application performance by providing connectivity between centralized on-premises or cloud data centers and edge nodes and devices.

Edge computing is particularly associated with IoT workloads with high data volumes and real-time interactivity. In addition to the distributed computing infrastructure required to support these workloads, a distributed data tier is also essential to enable the orchestration, management and processing of data in multiple locations. This delivers low-latency response times and minimizes the unnecessary movement of data to on-premises or cloud data centers.

IoT data processing typically includes real-time monitoring and processing of key metric data to identify and provide alerts on anomalies, as well as filtering, transformation and batch or stream transfer of data to on-premises or cloud data centers for long-term storage, processing and analysis. A large proportion of IoT data is repetitive, so localized data processing can be used to conserve bandwidth by reducing the need to transfer and process identical records. Additionally, the rise of intelligent applications places increasing demands on organizations to deliver real-time inferencing of artificial intelligence and machine learning models at the edge. Although not all IoT workloads involve AI and ML, a growing number do, and we assert that through 2026, three-quarters of IoT platforms will incorporate edge processing as well as AI and ML to increase the intelligence in IoT.
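The local filtering and alerting pattern described above can be illustrated with a short example. This is a minimal sketch, not a reference implementation from any IoT platform. It assumes a simple fixed-threshold rule for anomalies and a "forward only when the value changes" rule for repetitive readings. The `Reading` schema and the `process_at_edge` and `batch` functions are hypothetical names chosen for illustration.

```python
from collections.abc import Iterable
from dataclasses import dataclass


@dataclass(frozen=True)
class Reading:
    """A single sensor reading (hypothetical schema for illustration)."""
    sensor_id: str
    timestamp: int  # seconds since epoch
    value: float


def process_at_edge(
    readings: Iterable[Reading], low: float, high: float
) -> tuple[list[Reading], list[Reading]]:
    """Split a stream into local alerts and records worth sending upstream.

    Assumed rules (illustrative only):
    - any reading outside [low, high] raises a local alert;
    - a reading that repeats the previous value for the same sensor is
      dropped, so only changes are forwarded to the data center.
    """
    last_value: dict[str, float] = {}
    alerts: list[Reading] = []
    forward: list[Reading] = []
    for r in readings:
        if not (low <= r.value <= high):
            alerts.append(r)  # anomaly handled locally, with no round trip
        if last_value.get(r.sensor_id) != r.value:
            forward.append(r)  # value changed: worth transferring
            last_value[r.sensor_id] = r.value
    return alerts, forward


def batch(records: list[Reading], size: int) -> list[list[Reading]]:
    """Group forwarded records into fixed-size batches for upstream transfer."""
    if size < 1:
        raise ValueError("batch size must be at least 1")
    return [records[i:i + size] for i in range(0, len(records), size)]


# Usage: three identical temp-1 readings collapse to one forwarded record.
stream = [
    Reading("temp-1", 0, 21.5),
    Reading("temp-1", 1, 21.5),  # repeat: dropped
    Reading("temp-1", 2, 21.5),  # repeat: dropped
    Reading("temp-1", 3, 95.0),  # out of range: alerted and forwarded
    Reading("temp-2", 3, 19.0),
]
alerts, forward = process_at_edge(stream, low=0.0, high=80.0)
print(len(alerts), len(forward))                  # 1 3
print([len(b) for b in batch(forward, size=2)])  # [2, 1]
```

In this sketch, five readings produce three forwarded records and one local alert. Real deployments would typically use richer anomaly models and tolerance bands rather than exact-equality deduplication.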

The impact of intelligent application requirements is by no means limited to IoT workloads. Data-driven organizations continue to use specialist analytic and data science platforms to train models in on-premises or cloud data centers. However, the need for business processes that rely on these models — such as fraud detection — to be executed in real time means that leaders are increasingly bringing machine learning inferencing to the data (rather than taking the data to the ML models), enabling real-time online predictions and recommendations.

Consumers are increasingly engaging with data-driven services differentiated by personalization and contextually relevant recommendations. Additionally, worker-facing applications are following suit, targeting users based on their roles and responsibilities. Enterprises need to invest in local data processing of application workloads to deliver low-latency interactivity to meet customer and worker experience expectations across the globe.

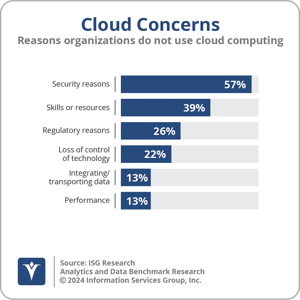

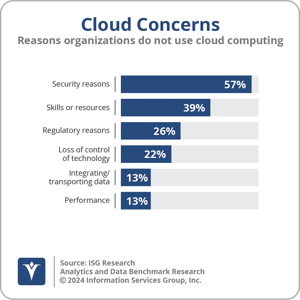

Ventana Research’s Analytics and Data Benchmark Research consistently illustrates that security and regulatory concerns are among the most common issues preventing the use of cloud computing environments. Maintaining compliance with data sovereignty and data privacy regulations prohibiting the transfer of data across national or regional borders is another major driver for investment in local data processing. This requirement is typically served by traditional on-premises software and hardware, but cloud providers (including Amazon Web Services, Microsoft, Google, IBM and Oracle) have responded to the need for local data processing by introducing distributed cloud architecture. This is characterized by the deployment of cloud software and infrastructure in on-premises locations to support high-performance regional data processing. It is our assertion that by 2026, one-half of organizations will invest in distributed cloud infrastructure to standardize environments used in the public cloud as well as in on-premises data centers and at the edge.

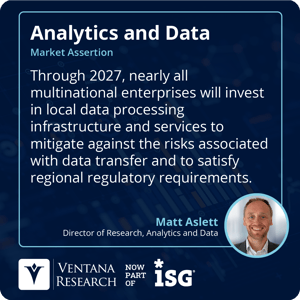

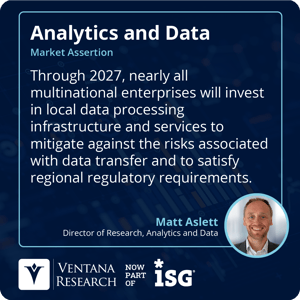

In 2023, several developments emerged from cloud providers related to the delivery of sovereign cloud environments. These operate under restrictions that apply not only to the processing of data but also to customer metadata, ensuring that it is available only to a cloud provider’s employees and systems (such as operational control, usage metering and billing systems) within a specific region. I assert that through 2027, nearly all multinational enterprises will invest in local data processing infrastructure and services to mitigate the risks associated with data transfer and to satisfy regional regulatory requirements.

Many data platform providers have also taken steps to increase support for edge and local data processing requirements. Distributed SQL databases from vendors such as Cockroach Labs, PingCAP, PlanetScale and Yugabyte are specifically designed to provide scalability and resiliency that extend beyond a single data center or cloud instance, potentially including edge servers or devices. Meanwhile, NoSQL database providers such as Couchbase and MongoDB offer low-footprint database instances on IoT or disconnected devices with local data processing and synchronization at the edge node tier as well as centralized cloud database services.

On-premises versus cloud is a false dichotomy. The data architecture of the future will need to span a hybrid architecture of cloud and on-premises data centers as well as multiple local data processing environments and edge devices. I recommend that enterprises be cognizant of the ability of data platforms to support distributed data processing requirements when evaluating potential providers.

Regards,

Matt Aslett